At some point in the last ten years, LeetCode-like interview/screening platforms re-invaded tech and beautifully inserted themselves as the new norm.

These are the kinds of platforms I’m talking about: CodeSignal, Arc, Codility, HackerRank, Coderbyte, and CoderPad. I’m gonna use ‘LeetCode’ as a general stand-in for them in this article, as that has become synonymous with the class of algorithmic problems usually posed by such platforms.

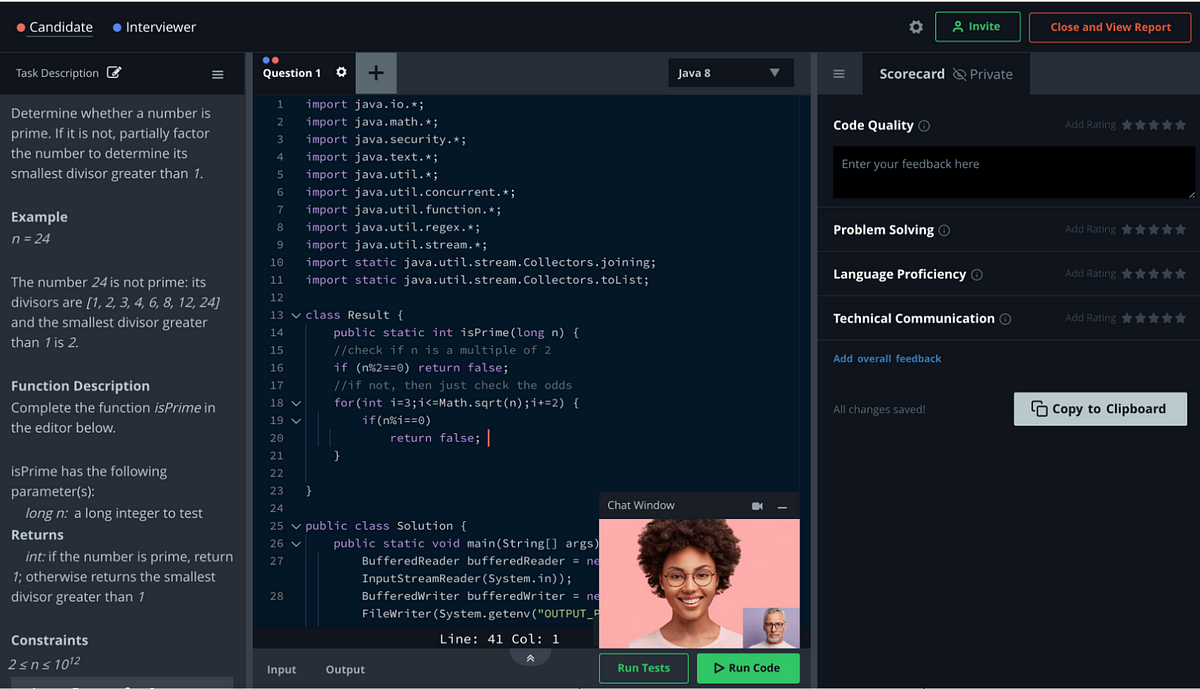

CodeSignal and others are usually online-only browser IDEs that pose coding challenges and ask engineers to solve them in a given window of time. Crucially, you must complete these challenges within the IDE itself so that any potential cheating can be caught. Yay! Sometimes they allow the interviewer to pop in and monitor you, ya know, like that creepy manager that appears over your shoulder? In fact, it’s even better, because they can see a video recording of every single character you type and re-type and copy and paste and ponder. You are, remember this, an input/output machine, right? Not really human, and now much less useful than your machine-LLM replacements. :)))

Honestly, I thought this was already a solved problem. I thought that we’d already gotten rid of this crap? And dispelled the notion that it provides good signal? The engineers of 10–20 years ago already fought the premise that binary-search or linked-list implementations were a good proxy for general ability. But the dogma persists! It’s just so … inviting as a concept, I suppose.

And these magical browser IDEs allow a whole new exciting level of invasiveness and enforcement and automated analysis of human competency 🙂 YAYAYAY

But, ok, fine, let’s do the rigmarole again. These tests are, to me, hogwash wrapped in cool branding, enticing hiring managers and time-poor startups with promises of efficiency, de-biasing, and attracting truly top talent. But to avoid the level of rhetoric I would most like to apply to this mess (it would be far too rude), I’m going to dismantle the premises and common defences one by one.

“It assesses raw intellectual horsepower and coding ability”

No; it predominantly tests pattern-matching ability, not general intelligence or problem-solving skills. Memorizing obscure algorithms and detecting trick questions requires a particular cognitive style — focused on details, recall, and speed.

But raw intellect manifests in many forms. True intelligence (perhaps not something your firm is optimizing for???) is illuminated through discussion of complex concepts, applying knowledge in unfamiliar contexts, synthesizing disparate ideas, and communicating compelling visions. The LeetCode gang of apps tests none of those higher-order thinking abilities. They reward grunt work & grinding, not innate intellectual talent.

The pretense that LeetCode evaluates some “pure” intellectual horsepower is simply elitist rationalization of an arbitrary hoop candidates are forced to jump through. If the goal is assessing versatile intelligence, open-ended discussions of technology and engineering tradeoffs are far more enlightening than isolated algorithmic trials.

“It provides an objective and unbiased comparison.”

Nope, sorry. Also, btw, it’s hilarious that you think this.

These LeetCode-things massively privilege candidates from a traditional academic CS/Math background while filtering out talented engineers from non-traditional paths. The emphasis on computer science trivia and speed inherently biases the process against qualified candidates who think and work differently.

And (HUGELY IMPORTANT) the harsh time constraints and pressure-cooker environment tend to disproportionately disadvantage underrepresented minorities and people with disabilities and neurodivergences. How... ironic. Or hilarious. You were trying to avoid bias, no?

In reality, great engineering involves aesthetics, communication, intuition, user empathy — subjective skills these platforms completely ignore. Maybe such things will be fairly assessed further down the funnel right? … Unless, umm, you don’t make it that far :) :)

“It’s useful to see how candidates fare when presented with a real problem; LeetCode does that!”

No; these puzzles bear little resemblance to the actual challenges engineers face day-to-day. Real engineering problems involve researching ambiguous requirements, collaborating with teammates, making tradeoffs amidst constraints, and building maintainable systems over time. LeetCode tests none of those skills. Optimizing algorithms in a contrived coding challenge reveals nothing about an engineer’s competency in communicating, collaborating, designing architectures, or shipping production code.

Alas, real engineering happens in a complex world, not simplistic fabricated scenarios. If the goal is assessing problem-solving skills, open-ended take-home projects and discussions of past experience are far more predictive of success than isolated algorithmic posturing.

“It acts as a minimal viable entry threshold. If they can’t get through this, we’re certain they’ll fail every other part of the funnel.”

Ok, I don’t think we’re gonna see eye to eye, but I’m gonna reiterate: these little puzzles don’t resemble the actual complex challenges engineers face day-to-day. They are different things. You are testing a fisherman by giving them a little rod and a pond. But if they’re used to skippering a trawler across the North Sea, they’ll fail your little game.

What’s worse is that no evidence will be available to show you how wrong you are, because you’ve wholesale decided this is the optimal approach. The thing is, you will get a nonzero amount of fish at the end of the day, and so you’ll take that as evidence that your process is working. And if, gosh, it doesn’t work, you’ll presume that some other part of your process is at fault. So you’ll hammer at other things until your funnel improves.

“Ok ok... it’s not ideal, I admit, but it’s the best way to avoid sinking time into obvious false-positive candidates”

Ok. Ok. It’s understandable why you rely on this stuff for initial screening given the impracticality of thorough evaluations at scale, but as we’ve highlighted: many qualified, skilled engineers will either avoid you altogether, or they’ll submit to your little process and get rejected due to the narrow skills you’ve assessed. And those that succeed might often be very capable “isolated problem” solvers, but, on the job, perhaps not the best engineers. If you wanna hire good people, I’m sorry: it’s going to take time. You cannot automate it away. Dedicate human time to humans.

“Give me some alternatives then. I’m listening.”

Cool. And actually, believe it or not, it’s the basic stuff. Ya know: talking to people, collaborating with them, exposing them to the diverse challenges you experience in your problem domain. Chat with them as you would an existing colleague, not as a lowly imposter of as-yet unproven capability.

Here are some solid suggestions with increasing dedication of time, optimizing for both parties:

- Thoughtfully designed take-home projects (NOT TIME CONSTRAINED) that resemble open-ended challenges faced by your engineering team. Assess problem decomposition, system design, and solution clarity.

- Initially small and then more expansive portfolio reviews of past work and projects to evaluate real-world engineering skills and experience.

- Simulated pair programming or bug diagnosis sessions to evaluate collaboration, communication, and pragmatic problem solving.

- Open-ended behavioral interviews focused on engineering competencies, mindsets, and soft skills. Discuss tradeoffs, goal conflicts, team dynamics.

- Standardized evaluations of communication ability, technical writing, presenting, meeting facilitation and other essential collaborative skills.

- Tailored discussions of each candidate’s background to surface unique strengths and experiences beyond algorithms.

In conclusion.

I’m tired.