How to coerce a response with less up-front prompting

This is an easy prompt-engineering hack I came across while building both pippy.app and veri.foo. It’s a very simple idea. It works through any interface to ChatGPT and similar LLMs, but it works best via the API, where you can assign a role to each message.

The idea is to prepopulate the LLM completion with the beginning of a response in order to force a certain type of content or format. Think of it like the ‘stop’ parameter, but instead of ‘stop’ it’s ‘begin’.

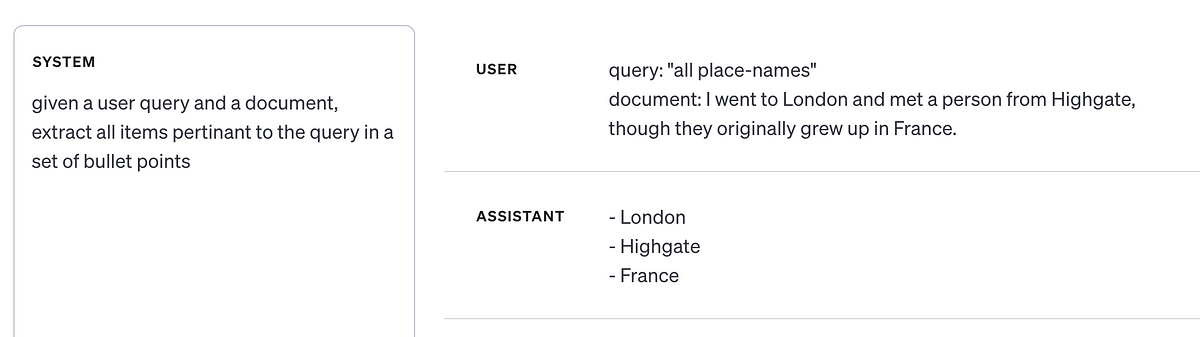

Let’s say you’re building a set of prompts for content extraction given a specific query. The SYSTEM prompt might be something like, “given a user query and a document, extract all items pertinent to the query in a set of bullet points.”

We can see it’s correctly identified three place-names from the document. However, it was our intention that the bullet points begin with an asterisk instead of a dash. (Obviously this is something more easily remedied in post-processing, but I just wanted a simple example for this article.)

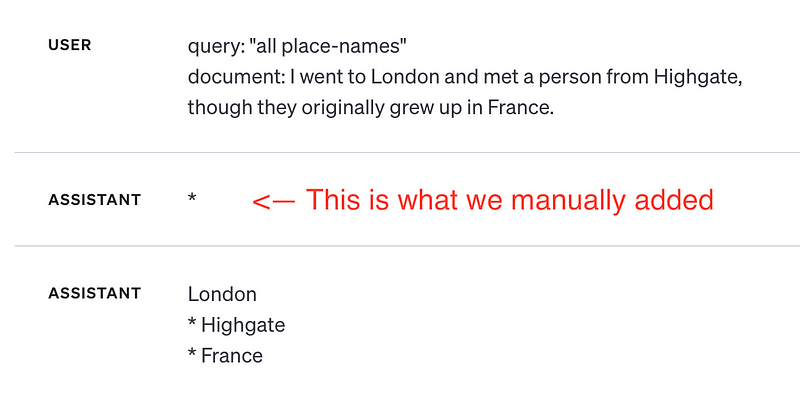

Here’s the trick: Simply insert your own ASSISTANT message in the prompt with the very beginning of what you would usually want the completion to look like. So, if using the API, the messages array might look like this:

```
[
  {
    "role": "user",
    "content": "query: \"all place-names\"\ndocument: I went to London and met a person from Highgate, though they originally grew up in France."
  },
  {
    "role": "assistant",
    "content": "* " // <--- we have added this
  }
]
```

Since we’ve prefixed the “*” ourselves, the completion will more consistently come through as a bullet-pointed list with asterisks.
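If you’re assembling that array in code rather than by hand, something like the following Python sketch avoids the manual quote-escaping. The helper name `build_prefilled_messages` is hypothetical, and including the SYSTEM prompt as the first message is my assumption about how you’d send it through the API; it only builds the payload and makes no API call.

```python
import json


def build_prefilled_messages(system_prompt, query, document, prefill):
    """Return a chat-style messages list that ends with a partial ASSISTANT turn.

    Hypothetical helper for illustration: the last message is the text we
    want the completion to start with (the "begin" nudge).
    """
    user_content = f'query: "{query}"\ndocument: {document}'
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_content},
        {"role": "assistant", "content": prefill},  # <--- we have added this
    ]


messages = build_prefilled_messages(
    system_prompt=(
        "given a user query and a document, extract all items pertinent "
        "to the query in a set of bullet points."
    ),
    query="all place-names",
    document=(
        "I went to London and met a person from Highgate, "
        "though they originally grew up in France."
    ),
    prefill="* ",
)

# json.dumps handles the escaping of quotes and newlines for us.
print(json.dumps(messages, indent=2))
```

The resulting list is what you would pass as the `messages` argument of whichever chat API client you use.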

Then it’s just a case of concatenating our forced completion with the generated completion, i.e.

`"* " + "London\n* Highgate\n* France"`

This is extremely useful if we find ourselves repeating instructions many times in the SYSTEM prompt because the LLM is failing to give us what we want. It’s just a tiny extra nudge that raises the probability of the tokens we want coming next. Here’s a more fleshed-out example where we’re asking for a more specific, XML-like format:

![SYSTEM prompt: given a user query and a document, extract all items pertinent to the query in a set of XML <place> elements with an id that is a lower-case version of the place-name. USER prompt: query: “all place-names” document: I went to London and met a person from Highgate, though they originally grew up in France. ASSISTANT prompt: `<!-- the set of places I identified with lowercase id attributes --> <place id="` And finally, the completion provided by ChatGPT: `london">London</place>` [etc.]](https://cdn-images-1.medium.com/max/800/1*bjb-LL_a6NZ-AsHJJ47BrQ.png)

Again, a very contrived example, but I’ve found it to produce much more reliable output, especially with structured formats/grammars.
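To make the stitching step concrete for the XML case, here is a minimal Python sketch. The `stitch` and `parse_places` helpers are hypothetical names, and `example_completion` is a hand-written stand-in for what the model might return, not real API output. The idea is just to glue the forced prefix back onto the generated text and then check that the result actually parses.

```python
import xml.etree.ElementTree as ET

# The partial ASSISTANT message we supplied ourselves.
PREFILL = (
    "<!-- the set of places I identified with lowercase id attributes --> "
    '<place id="'
)


def stitch(prefill: str, completion: str) -> str:
    """Concatenate our forced prefix with the generated completion."""
    return prefill + completion


def parse_places(xml_fragment: str) -> dict[str, str]:
    """Wrap the fragment in a root element and map each place id to its text.

    Raises xml.etree.ElementTree.ParseError if the stitched output is not
    well-formed, which doubles as a cheap validity check.
    """
    root = ET.fromstring(f"<places>{xml_fragment}</places>")
    return {el.attrib["id"]: (el.text or "") for el in root.findall("place")}


# Hand-written stand-in for the model's continuation (an assumption).
example_completion = (
    'london">London</place>'
    '<place id="highgate">Highgate</place>'
    '<place id="france">France</place>'
)

places = parse_places(stitch(PREFILL, example_completion))
print(places)  # {'london': 'London', 'highgate': 'Highgate', 'france': 'France'}
```

Because the prefix ends mid-attribute, the completion on its own is not valid XML; it only becomes parseable once the two halves are joined.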

Comments and questions welcome! ❤