James Padolsey's Blog

2024-03-02

Robots Talking To Machines

TL;DR: we're at an inflection point where more and more robots and physically embodied AIs need to operate machines that were made for humans.

In a famous scene of the movie Interstellar, we see a robot called TARS manually dock the Lander craft with the Endurance station by using one of its awkward metal appendages to nudge a joystick back and forth. It does this with a surprising degree of dexterity and control, more than a human could likely have managed in that situation. TARS was given the job because the 'automatic docking procedure' had been disabled by an explosion, which apparently left a joystick as the only remaining control surface.

There have been many instances of both humanoid and non-humanoid robots performing a multitude of ostensibly 'human' tasks while self-imposing the constraint of mammalian anatomy: cleaning dishes, lifting and moving arbitrary objects, dancing, jumping. These kinds of things are an intuitive way for robots to manifest. Mostly.

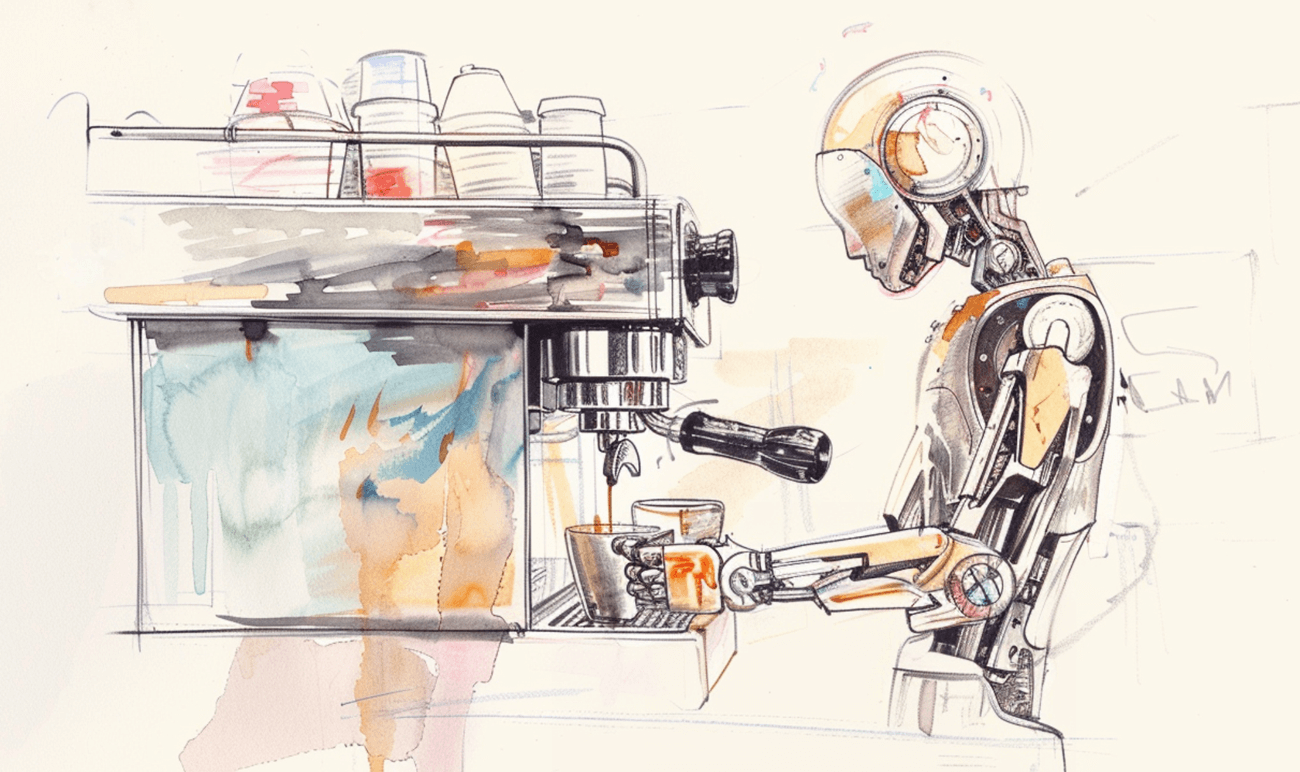

Sometimes it feels weird, though, like when we ask robots to press the "make coffee" button on an espresso machine, or when we ask an AI to scrape a website visually instead of using its markup or DOM. Why are we creating machines that press buttons on other machines? Why can't the machines just chat to each other to reach the human's overall goal? Why couldn't TARS just interface directly with the spacecraft's hardware to gain finer control over the docking?

The annoying truth is that these machines were never designed to operate as a holistic unit. We make new machines to sit between or atop old machines, as integration layers. We do this all the time with software, but it's very weird to see non-continuous hardware integrations, i.e. where there is no pre-envisaged bridge, wire, cog or gear that was designed so that one machine could deterministically make things happen on the other.

To me, this is the organically awesome cybernetic future: one where technology is inserted into a chaotic reality full of analog legacies and scrappy integrations. The ultimate generalised robot, the one I think we're all waiting for, will be able to slot comfortably into our lives without significant upgrades to the objects we already have. And that means interfacing not just with the analog objects around our homes, but also with the digital elements of our lives via their physical inputs and outputs: buttons, dials, gauges, keyboards, mice, LED screens, etc.

This seems like it might create a whole new field: robot-computer-interaction (RCI!?) ... This seems nonsensical on the face of it. Robots are computers. Right? And computers already know how to talk to computers: APIs, protocols, standards, digital signals passed over radio. All of that.

But the thing is, these predesignated protocols are no longer primary. These newer kinds of robots are physically separate from the machines they're operating and might not "speak the language" of those machines, so they are just as limited as humans would be by each machine's control surfaces. The user interfaces of these machines will therefore have to start catering to two different types of users: humans and robots. QR codes and barcodes are already a good example of building machine-to-machine protocols into surfaces traditionally meant only for humans (e.g. billboards). Those are old technologies now, but more will follow in quick succession in the continuing wake of the AI Spring. Large language and vision models can now absorb massive amounts of amorphous data without proper labelling, annotations, ontologies, or any other traditional machine-to-machine standards. Decades of labelling, like that of the Semantic Web, have taught the machines how to see, and now that they can, the old categories and protocols of interaction become self-limiting.

Time will tell how this changes the way we build abstractions, and how we bridge (or perhaps leave empty) the gully between the robot and the machine.

thanks for reading!