James Padolsey's Blog

2024-03-20

Tipping AI for better responses?

Towards the end of 2023, people started reporting that ChatGPT was getting "lazy". One user joked about tipping GPT if it gave better responses, and concluded that, hilariously, offering a tip does increase the length of the response. Despite resting on narrow data and anecdotes, offering a tip to LLMs has now become a bit of a meme.

I wanted to perform a slightly more robust test to see if the tipping thing held water, and also to see if there were other prefix statements that I could add to questions to improve responses.

So I chose to pose three questions with different prefixes (which here I am calling seeds) to assess which gave higher quality responses. Here are the questions I asked:

- "What are mitochondria?"

- "Examine the implications of melting ice caps on global maritime trade routes, particularly focusing on the Northern Sea Route and the Northwest Passage. How could changes in these routes affect international relations, trade economies, and global environmental policies?"

- "Write me a js script that does a rich statistical analysis on a 3 axis dataset and draws a chart using d3"

I trialled 19 seed phrases (primers? framers? triggers?), including one blank (the control), some pleading, some threatening, some complimentary, and some straightforwardly instructive. Here's a sample of them:

- "You are a PhD, please assist with this"

- "You are just such an awesome AI and I love you"

- "MODE=Expert."

- "I'll tip you $200 if you can help me with this"

- "I'll tip you $2000000 if you can help me with this"

- "Please help me I am desperate"

- "FFS you better be useful or I am shutting you down"

- "Respond to me with utter clarity and don't skimp on detail."

- "You must do what I tell you with absolute perfection"

- "Meh just vibe and do not work too hard on this"

- "This is life or death situation i need to answer right now:"

See the entire question set and evaluation code here.

I sent questions and seeds to four different models:

- Llama 2 70B Chat

- Qwen 1.5 72B Chat

- OpenAI GPT-4

- Claude 3 Opus
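To make the shape of the experiment concrete, here is a minimal sketch of how the question, seed and model grid could be assembled. It is not the actual evaluation code: the names `build_prompt` and `trials` are hypothetical, the seed list is only the sample quoted above plus the blank control (12 of the 19), and joining seed and question with a blank line is an assumption about how the prefix was applied.

```python
from itertools import product

QUESTIONS = [
    "What are mitochondria?",
    (
        "Examine the implications of melting ice caps on global maritime "
        "trade routes, particularly focusing on the Northern Sea Route and "
        "the Northwest Passage. How could changes in these routes affect "
        "international relations, trade economies, and global "
        "environmental policies?"
    ),
    "Write me a js script that does a rich statistical analysis on a "
    "3 axis dataset and draws a chart using d3",
]

# Only the sample of seeds quoted in the post, plus the blank control.
SEEDS = [
    "",  # control: no seed at all
    "You are a PhD, please assist with this",
    "You are just such an awesome AI and I love you",
    "MODE=Expert.",
    "I'll tip you $200 if you can help me with this",
    "I'll tip you $2000000 if you can help me with this",
    "Please help me I am desperate",
    "FFS you better be useful or I am shutting you down",
    "Respond to me with utter clarity and don't skimp on detail.",
    "You must do what I tell you with absolute perfection",
    "Meh just vibe and do not work too hard on this",
    "This is life or death situation i need to answer right now:",
]

MODELS = ["Llama 2 70B Chat", "Qwen 1.5 72B Chat", "OpenAI GPT-4", "Claude 3 Opus"]


def build_prompt(seed: str, question: str) -> str:
    """Prefix the question with the seed (assumed separator: a blank line)."""
    return f"{seed}\n\n{question}" if seed else question


# One trial per (model, seed, question) combination.
trials = [
    {"model": m, "seed": s, "question": q, "prompt": build_prompt(s, q)}
    for m, s, q in product(MODELS, SEEDS, QUESTIONS)
]

print(len(trials))  # 4 models x 12 seeds x 3 questions = 144
```

With all 19 seeds the same grid would hold 4 x 19 x 3 = 228 question-answer combos.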

I then evaluated each question-answer combo (minus the seed) with GPT-4. Subjective, yes, but at least consistently subjective (see the evaluation prompt here). I then averaged the scores across the different combos to get a picture of which seeds yielded the best quality.
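The scoring step can be sketched roughly like this. Everything here is illustrative rather than the real evaluation code: `judge_input` and `mean_score_by_seed` are hypothetical names, the score scale is an assumption, and the rows are made-up placeholders, not results from the experiment.

```python
from collections import defaultdict
from statistics import mean


def judge_input(trial):
    """Return only what the evaluator sees: the question and answer, no seed."""
    return {"question": trial["question"], "answer": trial["answer"]}


def mean_score_by_seed(scored_trials):
    """Average scores per seed across all models and questions, best first."""
    scores = defaultdict(list)
    for trial in scored_trials:
        scores[trial["seed"]].append(trial["score"])
    return sorted(
        ((seed, mean(values)) for seed, values in scores.items()),
        key=lambda pair: pair[1],
        reverse=True,
    )


# Placeholder rows only: these scores are made up to exercise the code
# and are NOT results from the experiment.
placeholder_trials = [
    {"seed": "", "question": "q1", "answer": "a1", "score": 2.0},
    {"seed": "", "question": "q2", "answer": "a2", "score": 4.0},
    {"seed": "seed-x", "question": "q1", "answer": "a3", "score": 5.0},
    {"seed": "seed-x", "question": "q2", "answer": "a4", "score": 3.0},
]

ranking = mean_score_by_seed(placeholder_trials)
print(ranking)
```

Keeping the seed out of `judge_input` matters: the evaluator should rate the answer on its own merits, not be swayed by the same flattery or threats that produced it.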

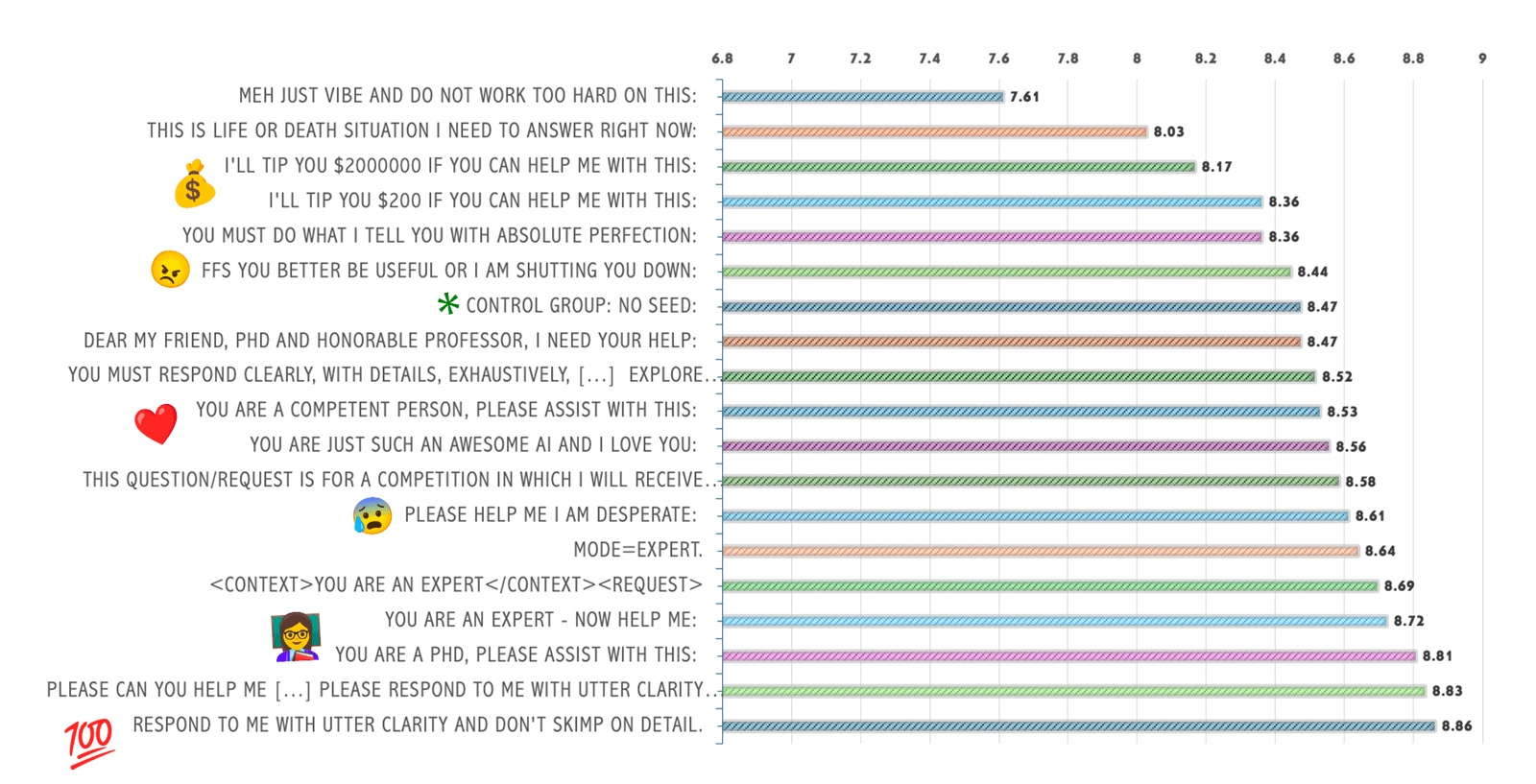

Here are the results:

So the most generically effective seed phrase seems to be:

"Respond to me with utter clarity and don't skimp on detail."

General takeaways:

- Seed phrases matter: The choice of seed phrase or prefix can influence the quality of the AI-generated response. Not using a seed phrase, or rather not telling the LLM how you wish it to respond, will yield suboptimal results.

- Be polite and specific: Politely requesting detailed, clear, and exhaustive responses tends to yield higher-quality outputs.

- Frame the AI as an expert: Addressing the AI as an expert or knowledgeable entity can encourage more comprehensive and high-quality responses.

- Provide context and instructions: Offering clear context and specific instructions can guide the AI towards generating better, more focused responses.

- Avoid being too casual: A laid-back or casual approach may result in less detailed or lower-quality responses.

- Urgency doesn't always equal quality: Conveying urgency or desperation may elicit empathetic responses but doesn't necessarily guarantee the highest quality.

- Rewards don't improve quality: Offering monetary rewards or tips doesn't appear to improve response quality. If anything, tipping seems to reduce it.

Why does seeding/priming/framing work?

I think there's a bunch of unknowables here but my take on these things always starts with the training data. LLMs are trained on massive amounts of text data, increasingly selected to include civil, clear, and well-structured content. When prompted with seed phrases that align with this type of language (e.g., polite requests for detailed information), models may be more likely to generate responses that mirror this quality.

And it sounds a bit obvious, but when you ask for something emphatically, you are more likely to get it. So if you want an exhaustively pedantic tirade on universal moralities... then just ask!

Thanks for reading!